Experiments Overview

Test paywall pricing and UI elements without releasing an app update

Utilize A/B Experiments to enhance your app's revenue generation. Determine the most effective combination of products on your paywall and the optimal pricing for each in-app purchase to maximize revenue. Customize and test various paywall configurations using a JSON configuration.

Overview

- Experiment Modes: Conduct Paywall experiments in two distinct modes: 'Standalone' or 'Within Placements'.

- Variation Support: Support for up to 5 variations, enabling comprehensive A/B/C/D/E testing.

- Custom JSON Configurations: Each variation can be customized with a unique JSON configuration, allowing for modifications in user interface and other elements.

- SDK Integration: Retrieve the name of the experiment for each user directly from the SDK.

- Base Variation Editing: Edit the base variation (Variation A) to establish a standard for comparison.

- Traffic Allocation: Allocate traffic to each variation as per requirement, allowing for controlled exposure and accurate data collection.

- Targeted Experiments: Conduct experiments tailored to custom audiences, such as specific countries, app versions, or other user segments.

Experiments within Placements

Enhance your approach with the new 'Placements' feature. This allows for the strategic management of paywall appearances within different sections of the app, such as onboarding, settings, etc., targeting specific audiences. Execute A/B experiments on selected paywalls to optimize conversion rates for particular placements and user groups. See more details below.

Experiments Priority

You can run multiple experiments on your paywalls simultaneously.

For both standalone and placement experiments, the latest experiment takes priority.

For instance, if a developer sets up an Onboarding Placement with paywalls and multiple experiments are created for overlapping audiences, the system will assign each overlapping user exclusively to the most recently launched experiment.

This approach ensures clarity in data analysis and avoids potential confusion from a user being part of multiple concurrent tests.
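The priority rule can be pictured with a small model. The sketch below is an illustration only, not Apphud's implementation: the `Experiment` type and its fields are hypothetical stand-ins, and it covers only a user who has not yet been assigned to any experiment (the FAQ below describes what happens to users already marked for a running experiment).

```swift
import Foundation

// Hypothetical stand-in for an experiment; not an Apphud SDK type.
struct Experiment {
    let name: String
    let launchedAt: Date
    let matchesUser: Bool   // whether the user belongs to the experiment's audience
}

/// Picks the experiment an unassigned user would join under the
/// "latest experiment takes priority" rule: the most recently launched
/// experiment whose audience includes the user.
func winningExperiment(among experiments: [Experiment]) -> Experiment? {
    experiments
        .filter { $0.matchesUser }
        .max { $0.launchedAt < $1.launchedAt }
}

let older = Experiment(name: "Onboarding price test",
                       launchedAt: Date(timeIntervalSince1970: 1_700_000_000),
                       matchesUser: true)
let newer = Experiment(name: "Onboarding layout test",
                       launchedAt: Date(timeIntervalSince1970: 1_700_086_400),
                       matchesUser: true)

let winner = winningExperiment(among: [older, newer])
print(winner?.name ?? "none")
```

Both experiments match the user here, so the one launched a day later is selected and the user is not counted in the older one.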

Experiment Predictions

Experiment Predictions are available if the LTV Predictions feature has been added to your app. For more information, see the Experiment Predictions guide.

Managing Experimented Paywalls in Your App

Integrating experimented paywalls in your app is straightforward but requires attention to detail regarding how you access paywalls from the SDK. Here's how to proceed:

Choose the Appropriate Experiment Mode: Your choice of experiment mode should align with your app's paywall implementation. If your app uses standalone paywalls, conduct A/B experiments on these standalone versions. Conversely, if your app utilizes paywall placements, your experiments should be within these placements.

Access Paywalls Correctly: It's crucial to retrieve paywalls correctly based on your chosen feature. If your app uses the placements feature, you must obtain the paywall object directly from the corresponding placement object. Avoid using the standalone paywalls array from the SDK in this context. Fetching a paywall through Apphud.paywalls() in a placement-based setup will disrupt your analytics, as purchases won't be accurately attributed to the respective placement.

Understand SDK Behavior with Experimented Paywalls: Be aware that the SDK's treatment of experimented paywalls varies based on the type of experiment. If you run an A/B experiment on a paywall within a placement, only that specific placement will return the modified version of the target paywall. Other areas, including the standalone paywalls array and other placements, will continue to present the original paywall. Similarly, experimenting on a standalone paywall will not affect the representation of that paywall within any placements.

Create Experiment

When creating an experiment, you can configure several parameters. Each is explained in detail below.

Experiment Setup

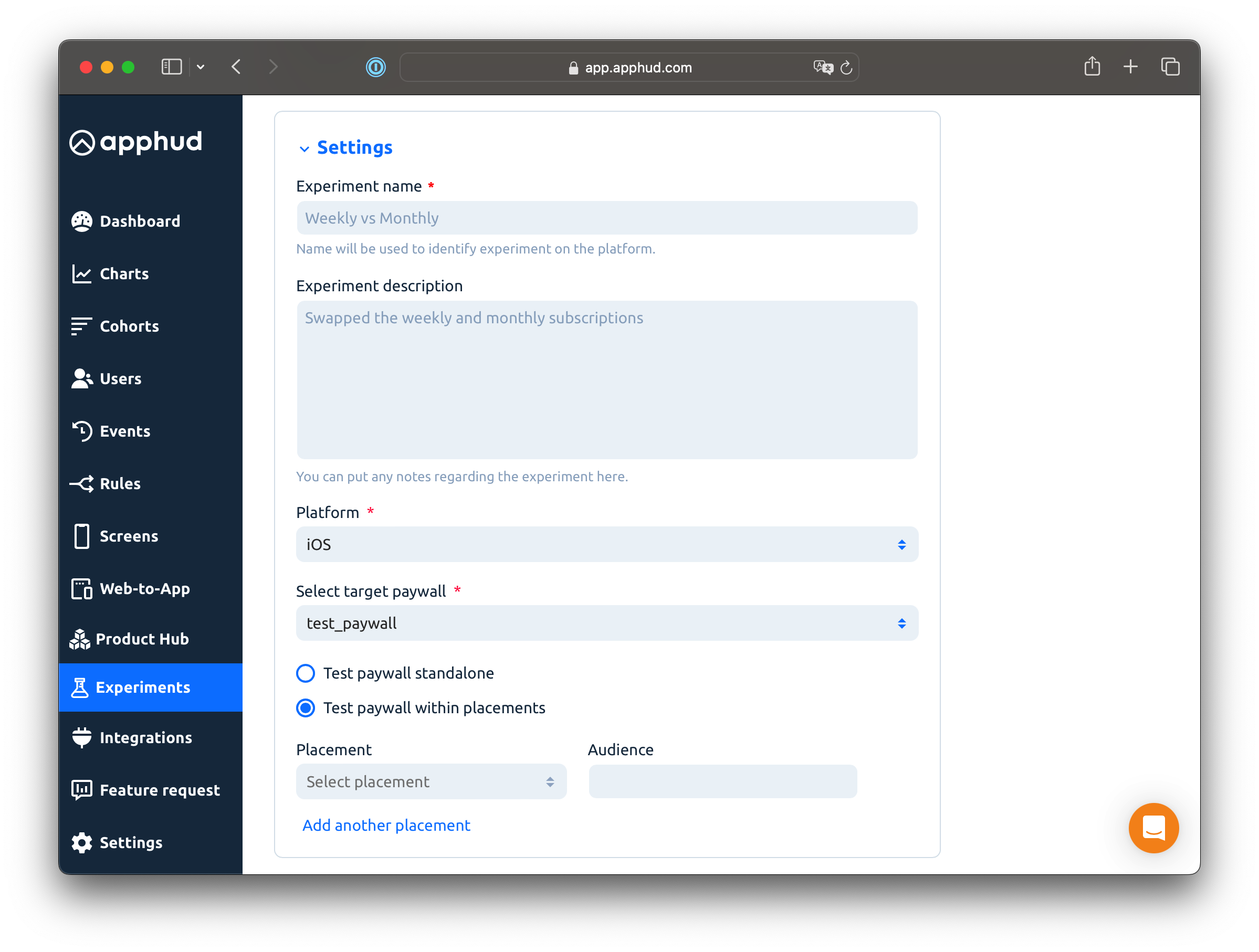

Experiment Name and Description: You can enter the name of your test here, and optionally add a description to provide more context.

Platform: Each experiment can be conducted on only one platform at a time. If your app is available exclusively on either iOS or Android (not both), the platform selection will be automatically tailored to your app's availability.

Target Paywall: Select one of your existing paywalls to use as the baseline (control) variation in your experiment. This paywall will act as the standard against which other variations are compared.

Test Paywall Standalone: This traditional approach to A/B testing involves altering the paywall object with one of the variations. Standalone paywalls can be accessed using the Apphud.paywalls() method in the SDK or a similar function.

Test Paywall within Placements: This newer and recommended method allows for more targeted paywall A/B testing. By testing within specific placements, you can assess different paywalls for specific audience segments. This approach ensures that the testing is confined to a particular area within the app, leaving other sections unaffected.

Variations

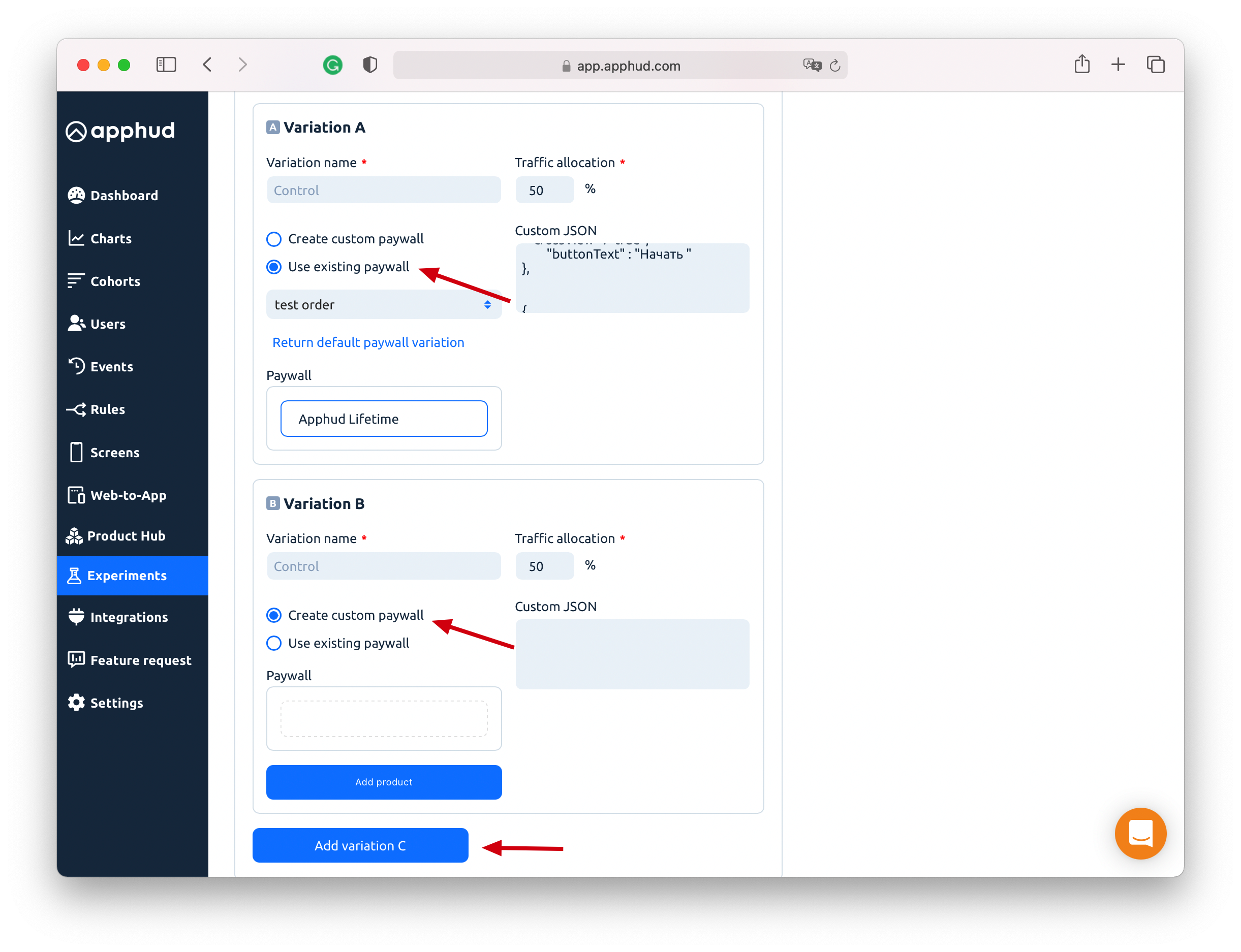

You can define up to 5 distinct variations for the target paywall. For each variation, you must specify a unique combination of three elements: a custom JSON configuration, a distinct variation name, and a specific set of products. You can also create a new variation by duplicating an existing paywall.
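As an illustration of how an app might consume a variation's custom JSON, here is a minimal sketch. The keys (`title`, `showsCloseButton`, `highlightedProductId`) and the `PaywallConfig` type are hypothetical. Apphud does not prescribe a schema, so your own configuration will differ, and how the JSON reaches this code depends on how your app reads it from the paywall object.

```swift
import Foundation

// Hypothetical configuration shape; the keys are illustrative only.
struct PaywallConfig: Decodable {
    let title: String
    let showsCloseButton: Bool
    let highlightedProductId: String?   // optional: may be absent in some variations
}

// Example JSON as it might be entered for "Variation B".
let variationBJSON = """
{
  "title": "Unlock Premium",
  "showsCloseButton": false,
  "highlightedProductId": "com.example.annual"
}
"""

/// Decodes a variation's JSON into a typed config, or returns nil if it is malformed.
func decodeConfig(from json: String) -> PaywallConfig? {
    guard let data = json.data(using: .utf8) else { return nil }
    return try? JSONDecoder().decode(PaywallConfig.self, from: data)
}

if let config = decodeConfig(from: variationBJSON) {
    print(config.title, config.showsCloseButton)
}
```

Returning nil on malformed JSON lets the app fall back to its default paywall layout instead of crashing when a variation's configuration contains a mistake.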

Variation Naming: When creating A/B tests, avoid using special symbols such as $, *, ., or similar characters in variation names, as these are not supported by the system. For best results, use alphanumeric characters and underscores.
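If variation names are generated or checked in your own tooling, a conservative check along these lines can catch unsupported characters early. This is a sketch under the assumption that only ASCII letters, digits, and underscores are safe. The dashboard's exact rules are not specified here, and `isValidVariationName` is a hypothetical helper, not part of any SDK.

```swift
import Foundation

/// Returns true if the name is non-empty and contains only
/// ASCII letters, digits, and underscores.
func isValidVariationName(_ name: String) -> Bool {
    guard !name.isEmpty else { return false }
    let allowed = CharacterSet(charactersIn:
        "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789_")
    return name.unicodeScalars.allSatisfy { allowed.contains($0) }
}

print(isValidVariationName("annual_discount_v2"))
print(isValidVariationName("price$9.99"))
```

The first name passes. The second is rejected because it contains `$` and `.`.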

Traffic Allocation: This feature manages the distribution of traffic (users) among different variants. By default, the distribution is set to a 50/50 split. However, these allocation percentages can be adjusted at any point during the experiment's runtime to suit your testing needs.

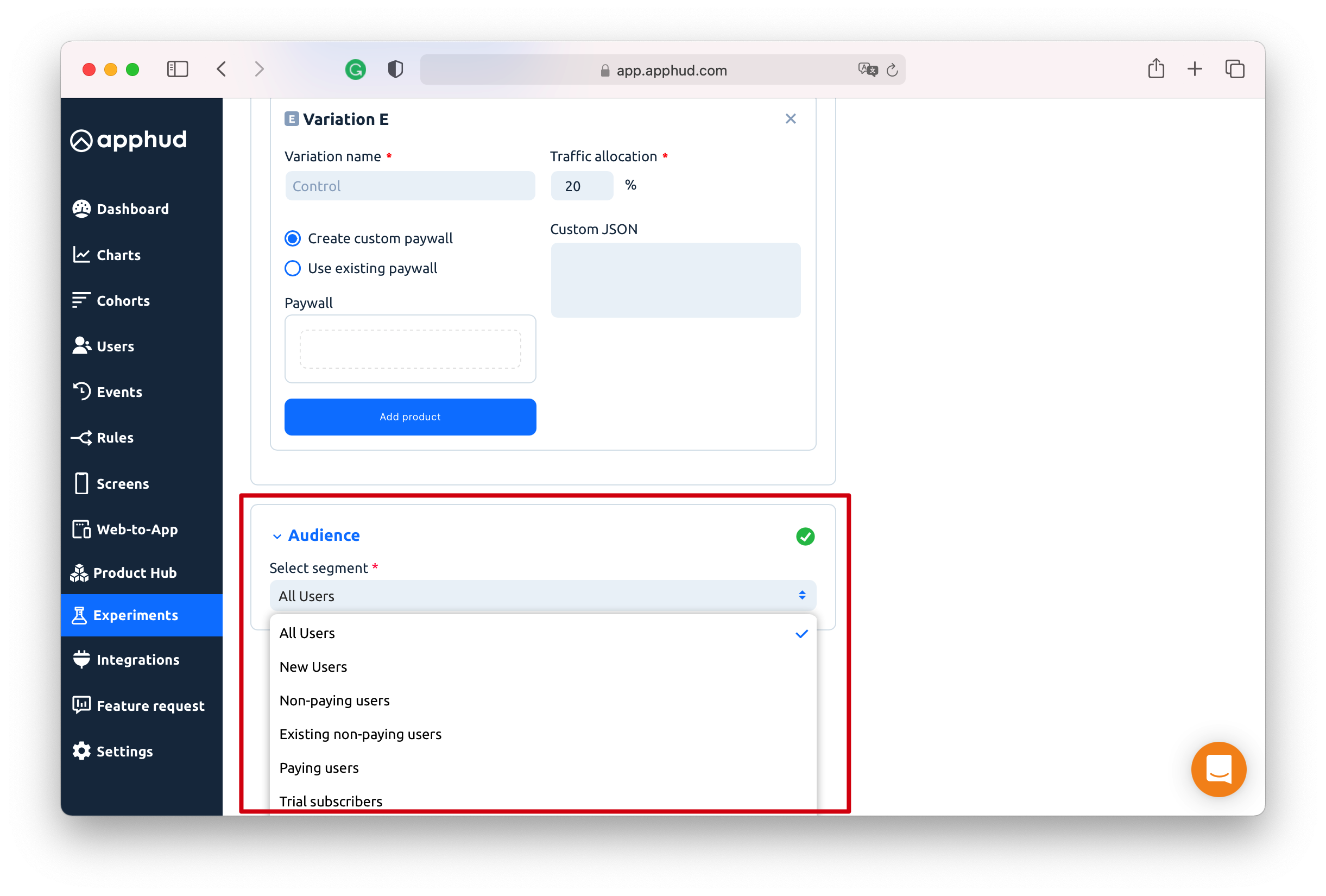

Audience

Audiences are designed to segment users based on various criteria, such as whether they are new or existing users, their country, app version, and more. Read more.

For your experiment, you can either select from pre-existing default audiences or create a custom audience tailored to your specific requirements. This flexibility allows for more targeted and effective experimentation.

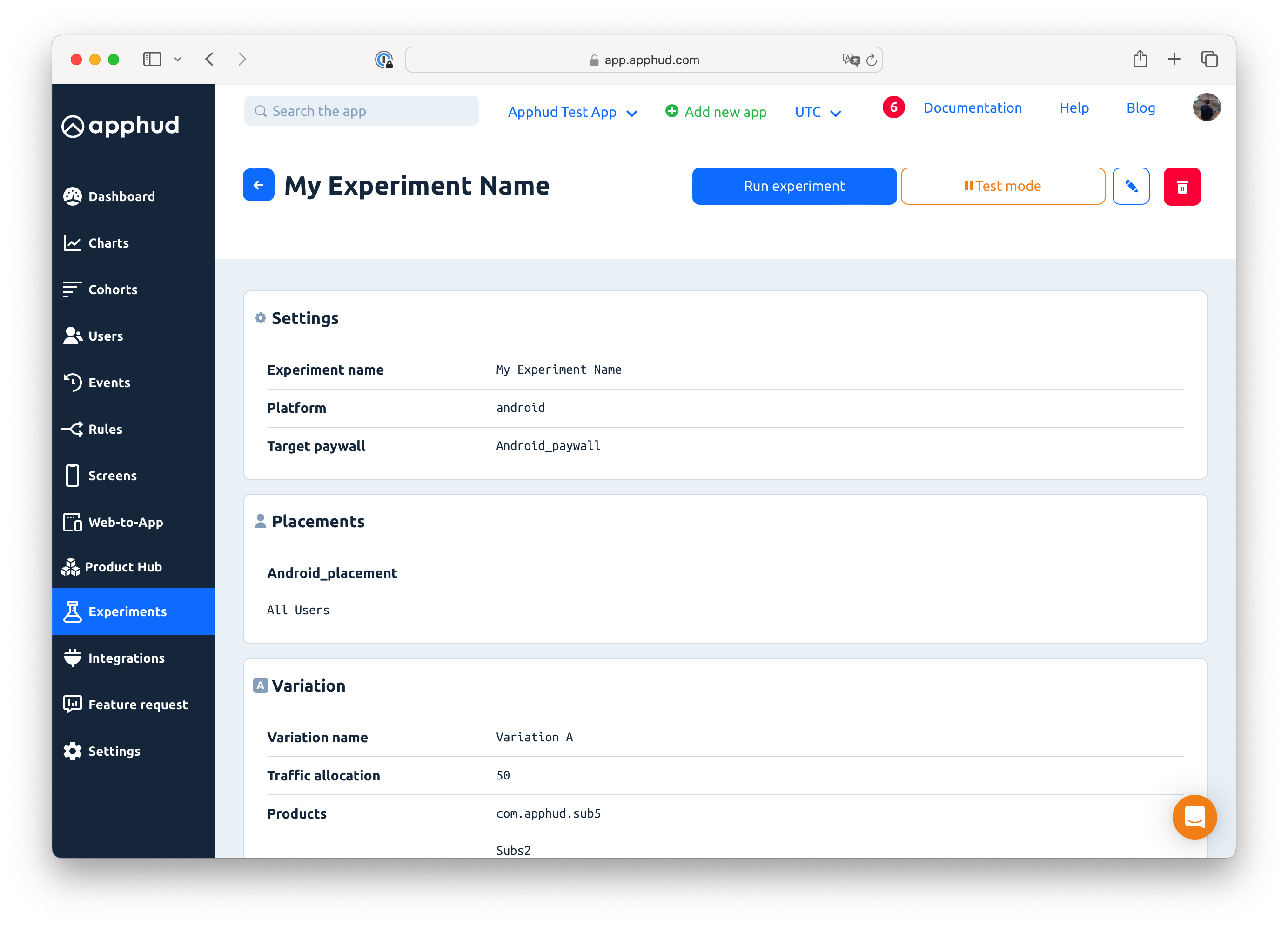

Once all experiment settings are configured to your satisfaction, click "Save and review." This action takes you to a summary page where you can perform a final review and verification of all settings before finalizing your experiment setup.

Test Mode

You can test your variation setups on a device before launching an experiment. This feature allows you to preview the paywalls that will be shown to users for each A/B test variation.

Only Sandbox Users Supported: Test Mode is available only for sandbox users, i.e., Xcode, TestFlight, or Android Studio installs.

Key Points

- Audience Step Skipped. During test mode, the audience selection step is bypassed. This enables you to test your variations regardless of the selected target audience.

- Sandbox Only. Test Mode currently applies only to sandbox users. All sandbox users will be assigned to the tested variation, regardless of their previous history, subscription status, etc.

- Keep Experiment Priority in Mind. If another live experiment, or another experiment with Test Mode on, was created later than the current one, it may override the current experiment. A newer experiment has higher priority than an older one.

- Keep the SDK Paywalls Cache in Mind. The SDK caches paywalls, and the cache lifetime differs between sandbox and production modes. For sandbox installations the cache should last around 60 seconds. If you still don't see your test variation after that, try reinstalling the app.

- Change Traffic Allocation to Test Another Variation. Change the Traffic Allocation of your desired variation to 100%. After testing, ensure you revert the traffic allocation value to its original setting.

This approach helps you confirm that each variation functions correctly and appears on the device as your users will see it.

Run Experiment

Once you have verified that all settings are correct, click "Run experiment" to start the test. Otherwise, edit the experiment.

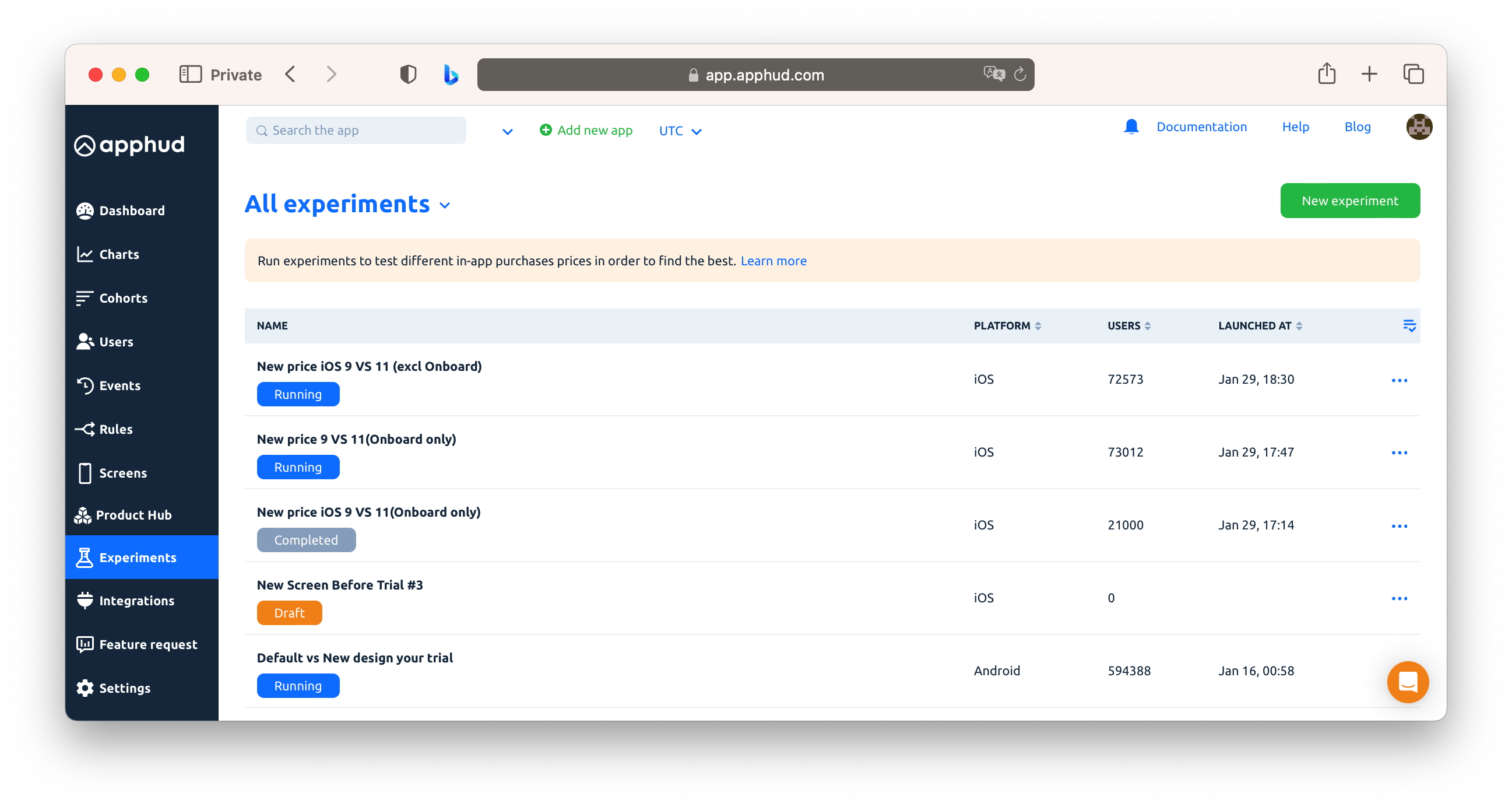

Experiment States

If you don't want to run the test immediately, the experiment is saved as a draft. The draft can be edited and launched later.

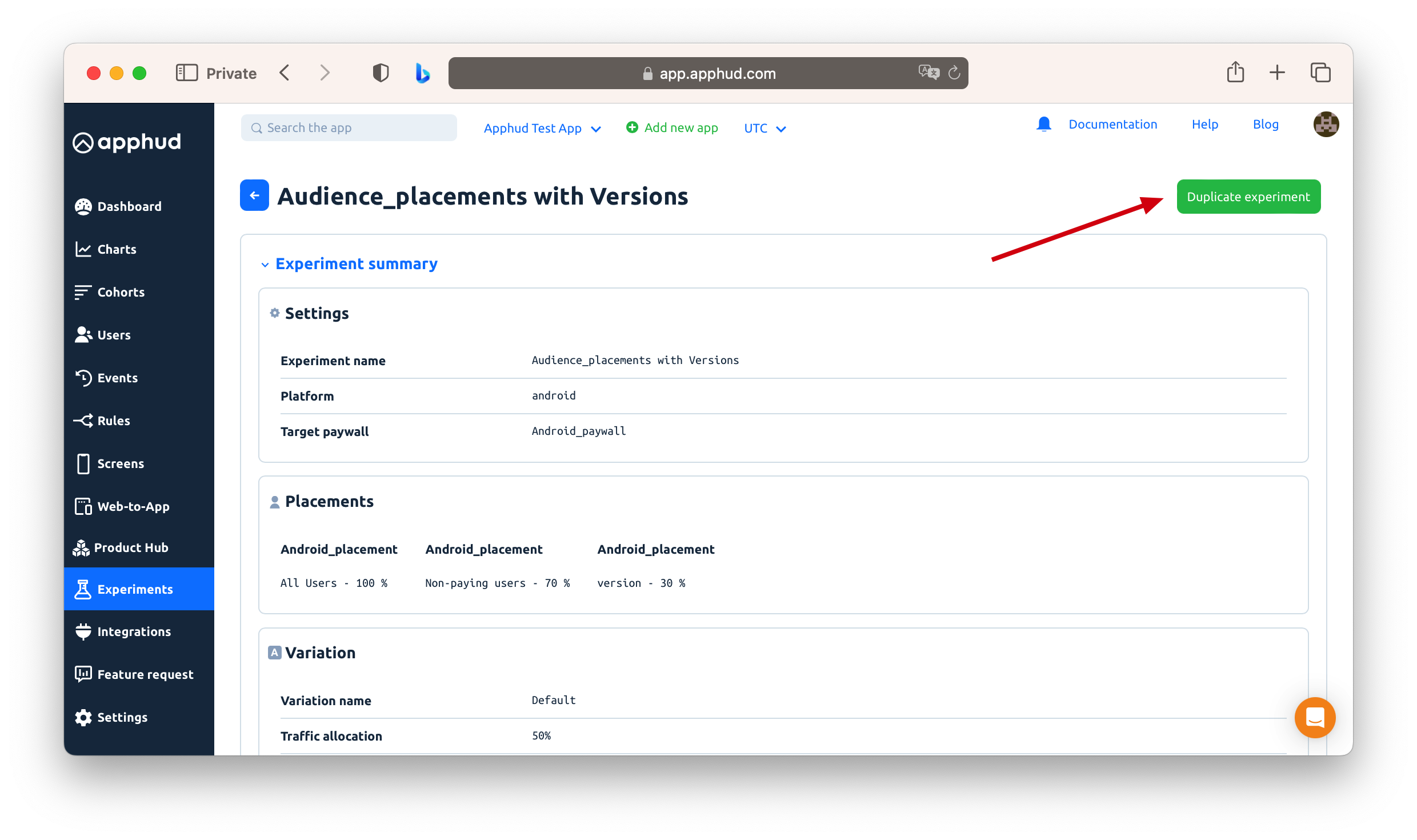

Duplicate Experiment

To copy an experiment, click "Duplicate" in its context menu in the experiment list. All experiment settings (such as target paywall, variations, and audience) will be copied to the new experiment.

This function is available for Running and Completed experiments.

Using Experiments in Observer Mode

You can use A/B experiments on paywalls and placements even in Observer mode. If you don't use the Apphud SDK to purchase subscriptions, you need to specify the paywall identifier and, optionally, the placement identifier that were used to purchase a product. If you pass the paywall identifier correctly, experiment analytics will work as expected.

iOS:

You need to specify the paywall identifier and, optionally, the placement identifier before making a purchase, using the willPurchaseProductFrom method:

    Apphud.willPurchaseProductFrom(paywallIdentifier: "paywallID", placementIdentifier: "placementID")
    YourClass.purchase(product) { result in
        ...
    }

Android:

You need to specify the paywall identifier and, optionally, the placement identifier after making a purchase, using the trackPurchase method:

    // Call trackPurchase after a successful purchase.
    // Always pass offerIdToken when purchasing a subscription.
    // Pass paywallIdentifier and placementIdentifier to correctly track purchases in A/B experiments.
    Apphud.trackPurchase(
        purchase: Purchase,
        productDetails: ProductDetails,
        offerIdToken: String?,
        paywallIdentifier: String? = null,
        placementIdentifier: String? = null
    )

Complete and Evaluate

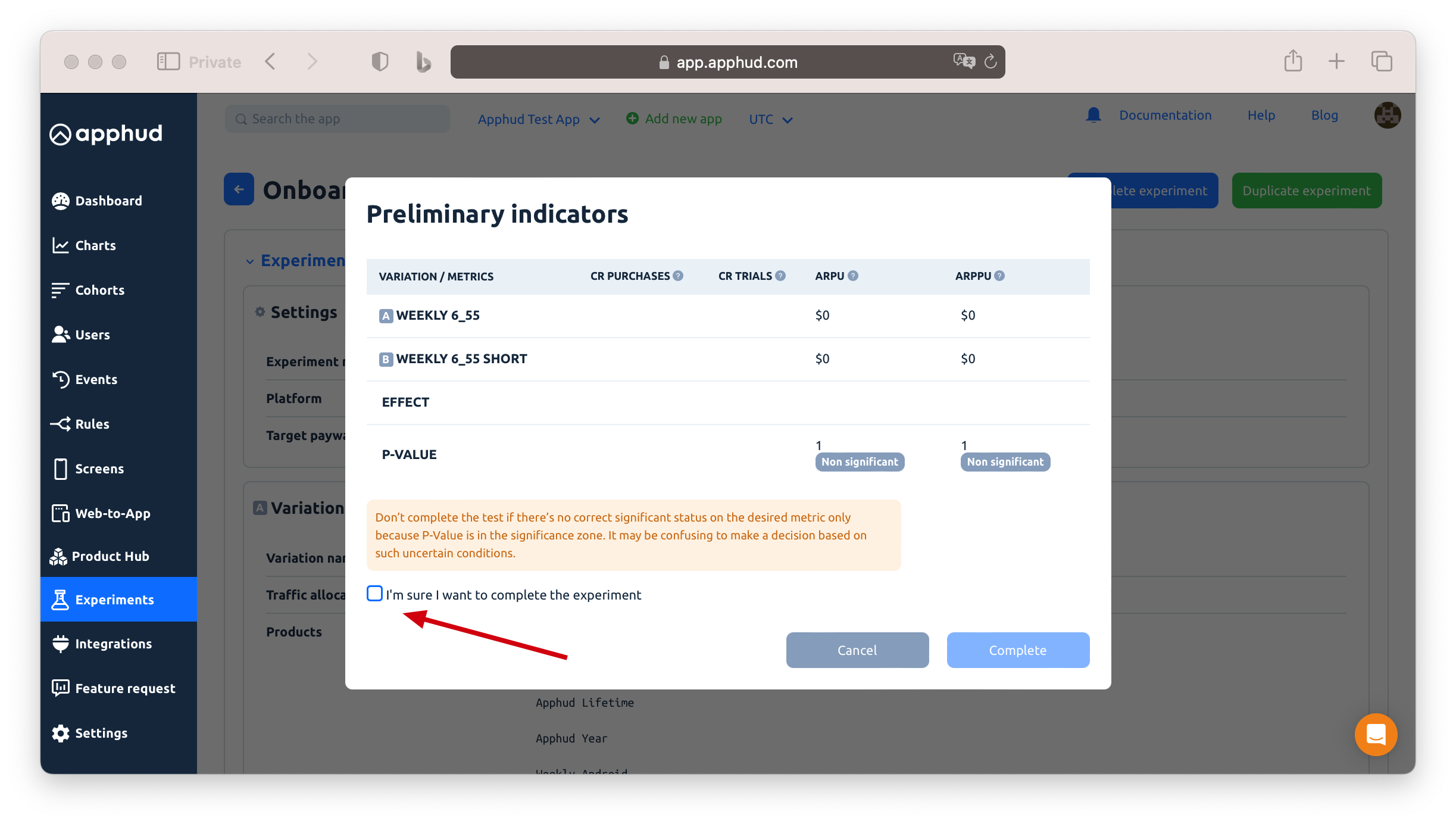

When you see significant results on the desired target metric, you can complete the experiment.

If the metric fails to show significance over an extended period, it may be a signal to re-evaluate the experimental conditions. Consider conducting a new test with more pronounced variations in pricing and paywall parameters, as this could amplify the potential impact of these changes and lead to more significant results.

Learn more about how to analyze experiment data in the Analyzing Experiments guide.

FAQ

If a user already has an active subscription and matches the Experiment Audience, will they be included or excluded from the experiment?

Any user who meets the Experiment Audience conditions will be assigned to a variation as soon as they launch the app, even if they already have an active subscription. Current subscription status is not checked at the moment of assignment — only the Audience conditions are.

If you don’t want subscribers to take part in the experiment, you can exclude users with an active subscription in two ways:

- Filter them out in the Audience. Add an extra condition like “In-app subscription status is not active” (or include only non-active statuses such as “expired / none”), so users with an active subscription will never be assigned to this experiment.

- Handle it in your app logic. Keep the Audience broad, but in your code only show the paywall to users who don’t have an active subscription.

Example: You’d like to run an experiment on 100% of users with Country by IP = Mexico. You create an Audience with this condition and set experiment allocation to 100% of this Audience.

After the experiment starts, any user whose Country by IP is Mexico will be assigned to the experiment when they launch the app — whether they have an active subscription or not. If your app hides paywalls for users with an active subscription, these subscribed users will still be counted as part of the experiment audience (in the Users metric), but they will never see the tested paywall.

This is why experiment analytics may show more Users (assigned to the experiment) than Unique Views (users with actual paywall impressions). Conversions are always calculated starting from Views, not from all assigned users.
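The difference between Users and Unique Views can be made concrete with a small calculation. The numbers below are invented for illustration, `VariationStats` is a hypothetical type, and the formula (purchases divided by unique views) is an assumption about how a view-based conversion rate is computed in general, not a statement of Apphud's exact metric definition.

```swift
// Hypothetical per-variation counters; the values are made up.
struct VariationStats {
    let users: Int         // users assigned to the variation
    let uniqueViews: Int   // assigned users who actually saw the paywall
    let purchases: Int
}

/// Conversion measured from views, not from all assigned users.
/// Returns nil when nobody has viewed the paywall yet.
func viewToPurchaseRate(_ stats: VariationStats) -> Double? {
    guard stats.uniqueViews > 0 else { return nil }
    return Double(stats.purchases) / Double(stats.uniqueViews)
}

let variationA = VariationStats(users: 1000, uniqueViews: 600, purchases: 30)
if let rate = viewToPurchaseRate(variationA) {
    print(rate)
}
```

With these figures the rate is 30 / 600 = 5%. Dividing by all 1,000 assigned users would give 3% and understate the paywall's performance, because 400 of those users never saw it.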

What happens if a user satisfies both the placement's top priority paywall conditions and the audience conditions for an experiment running within the placement but for the lowest priority paywall?

If a paywall within a placement is part of an A/B test, it takes precedence over other paywalls. The user will see the paywall associated with the test, even if another paywall is configured as the highest priority for that audience in the placement settings.

For example, Onboarding placement has the highest priority paywall that targets users from the US, and it has another paywall for “All users” audiences, which has the lowest priority within the placement. When an experiment is started for the paywall used for “All users” within Onboarding placement, new users from the US will be shown the paywall from the experiment.

Can the same user participate in multiple experiments at once?

Yes. A user can be part of multiple experiments, but with the following rules:

- Different Paywalls: If the experiments target different paywalls, the same user can take part in both.

- Same Paywall in Different Placements: If the same paywall is used across different placements, experiments in those placements are treated as independent. A user can therefore also participate in both experiments.

- Same Paywall & Same Placement: Only one experiment can run per paywall–placement pair for a user at a time.

This way, a user may be included in several experiments simultaneously, as long as each experiment has a unique paywall–placement context.
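One way to picture these rules is a per-user table keyed by the paywall–placement pair. The sketch below is a simplified model under that assumption. `ExperimentContext` and `UserAssignments` are hypothetical types, not part of the Apphud SDK.

```swift
// Hypothetical key identifying where an experiment runs.
struct ExperimentContext: Hashable {
    let paywallID: String
    let placementID: String?   // nil models a standalone paywall experiment
}

// Tracks which running experiment holds each context for one user.
struct UserAssignments {
    private(set) var active: [ExperimentContext: String] = [:]

    /// Returns true if the user joins the experiment, or false if another
    /// running experiment already holds the same paywall–placement pair.
    mutating func assign(experiment: String, to context: ExperimentContext) -> Bool {
        guard active[context] == nil else { return false }
        active[context] = experiment
        return true
    }
}

var user = UserAssignments()
let joinedFirst = user.assign(
    experiment: "Test 1",
    to: ExperimentContext(paywallID: "main", placementID: "onboarding"))
let joinedOtherPlacement = user.assign(
    experiment: "Test 2",
    to: ExperimentContext(paywallID: "main", placementID: "settings"))
let joinedSamePair = user.assign(
    experiment: "Test 3",
    to: ExperimentContext(paywallID: "main", placementID: "onboarding"))
print(joinedFirst, joinedOtherPlacement, joinedSamePair)
```

The first two assignments succeed because the placements differ. The third is rejected because "Test 1" already occupies the same paywall in the same placement.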

If a user was marked to take part in the experiment “Test 1” and a new “Test 2” is launched on the same placement paywall, which paywall will the user see?

If “Test 1” is still Running, the user will not be marked for “Test 2” experiment and will continue to see the paywall from “Test 1”. If “Test 1” has Completed by the time “Test 2” starts, and the user meets the audience criteria for “Test 2”, they will be included in Test 2 and shown the corresponding paywall.

What happens if a user is included in an experiment targeted to a certain audience, but later updates and no longer belongs to the audience covered by the test?

The user will remain marked in the experiment and continue participating as long as the experiment remains active. An app version update does not exclude the user from a Running experiment. For example, if a user is included in an experiment targeted to a certain app version but later updates to a newer version, they will still be shown the paywall defined by the experiment. Similarly, if the experiment targets a certain Store country, the user will remain in the experiment even if they change their Store country.

I set “New Users = 100%” for my experiment, but when I run it in Test Mode on a device with an existing app installation, I still see the test variation. Why?

This happens because in Test Mode audience filters and user history are ignored. Any sandbox user — regardless of whether they have prior installs, purchases, or current subscription status — will always be assigned to the test variation with 100% traffic. Test Mode is designed only for verifying that your paywalls and variations display correctly, not for testing how users are split by audience or subscription conditions.

Can a user be assigned to an experiment after certain actions in the app (e.g., closing the onboarding paywall without purchase) rather than immediately after the app launch?

Yes, but it requires additional setup on the developer’s side. This may be necessary if you must ensure a clean experiment allocation for an audience that cannot be predefined and depends on user behavior and actions. By default, users are assigned to experiments after the app launch, when placements/paywalls are fetched for the first time. If you want to re-assign users later, for example, only include those who closed the onboarding paywall without purchasing, you need to:

- Define a custom user property. For example: "first_paywall_closed"

- Define a custom audience based on this property value. For example: "first_paywall_closed" = true.

- Set up an experiment for the custom audience where the target paywall is the one shown after the onboarding.

- Set this property for the user and flush it immediately after the key user action occurs (e.g., when the onboarding paywall is closed without a purchase)

- Refresh user data and fetch placements/paywalls after flushing.

In Swift, the last two steps can look like this:

    // Set the property, flush it immediately, then refresh user data and placements.
    Apphud.setUserProperty(key: .init("first_paywall_closed"), value: true)
    Apphud.forceFlushUserProperties { result in
        Apphud.refreshUserData { _ in
            Apphud.fetchPlacements { placements, _ in
                // placements are refreshed completely
            }
        }
    }

This ensures Apphud re-evaluates the user against the updated audience and assigns them to the correct experiment tied to the follow-up “post-onboarding” paywall. This way, users who initially saw the onboarding paywall but didn’t purchase will be funneled into the experiment for the “post-onboarding” paywall and distributed across the experiment variations.

For more details, please check: Audiences based on custom User Properties and Custom audiences in Experiments.

Why don’t sandbox user purchases and views appear in my A/B experiment analytics?

Sandbox transactions are excluded from all aggregated analytics in Apphud, including:

- Experiment metrics (e.g., ARPU, View to Purchase, Views, Users, etc.)

- All Charts and Dashboard statistics

- Total Spent field in the Users list

Only production users' events and purchase data are processed for these calculations. Sandbox purchases are shown in the user’s event timeline for testing purposes, but they are not counted in aggregated reports or experiment results.

Why isn’t the user distribution exactly 50/50 between variations?

This is expected behavior. The assignment algorithm doesn’t alternate users strictly (e.g., the first user to A, the second to B). Instead, it applies internal balancing and quality metrics to ensure consistent experiment integrity. Because of this, early distribution may look uneven — for example, 140 vs. 112 users. As the sample size grows, the proportions tend to balance out. A perfect 50/50 distribution is not required and should not be expected.

I don't see the Variation B name associated with Paywall Shown in Events, although the Experiment statistics show Unique Views for that variation greater than 0. Why?

All events logged for the experiment belong to the Target Paywall and can be tracked under the Target paywall name in Events as well as in analytics reports in Charts or Cohorts. Paywalls used as Variations are virtual entities of the Target paywall, and statistics relevant to the variations should be checked in Experiment Analytics.

For the experiment with "All Users" audience, why does the number of paywall openings in the Experiment statistics differ from the number in Events?

Several factors can explain this difference:

- Repeated openings – a single user can open the paywall multiple times, so Events may contain more total events than the "Unique Views" value in the experiment statistics.

- Data update delay – experiment reports are updated with a slight delay (up to 1 hour) compared to the raw events, because the metrics take some time to prepare.

- Events before experiment start – paywall openings that occurred on the same day but before the experiment was launched appear in Events when filtering by date, but are not included in the experiment statistics.

- Sessions started before the experiment – Events can include Paywall shown events from users who launched the app before the experiment started and kept it open in the background without relaunching it during the experiment. Such users are not marked for the experiment. Therefore, even if you see a Paywall shown event matching the experiment's target paywall name, it is not counted in experiment data, because the user is not part of the experiment. Users are assigned to an experiment only when the app is launched, not when it returns from the background.

Updated 3 months ago